Asynchrony

Up until now, the code examples we have written have run synchronously. However, in even the most trivial programs which achieve any goal in the real world, JavaScript runs in a highly asynchronous environment. It must, in fact – not only is JavaScript single-threaded, but it shares this single thread with other browser responsibilities such as rendering and responding to user interaction. We cannot afford for potentially lengthy events, such as network requests or disk operations, to block this thread while they complete.

Additionally, because so much of our code in the browser deals with user interactions, we must utilize a mechanism that allows us to respond to events – like button clicks, mouse moves, or keystrokes in a text field – even if we do not know ahead of time when, or in what order, they will occur.

Execution Contexts & The Stack

In order to understand asynchronous mechanisms, we must first understand the synchronous mechanisms that control program flow and execution order in JavaScript. Execution contexts are records used by the JavaScript engine to track the execution of code. We will most commonly deal with function execution contexts, which associate a particular function with information about its use, such as its lexical environment (the variables it can access) and the arguments with which it was invoked.

Let’s look at an example of the stack of execution contexts created for a very simple execution flow.

function foo() {

bar();

}

function bar() {

baz();

}

function baz() {

qux();

}

function qux() {

console.log('Hello');

}

// call foo

foo();

We’ve defined four functions above. Keeping in mind what we know about function hoisting, the order in which they are defined does not matter – what matters is the path of execution created when one function calls another.

In this flow, we are running the script for the first time and thus start in the

global execution context. When foo is called, a new execution context is

created for foo and pushed on top of the context stack. When bar is called

inside of foo, a new execution context is created for bar and pushed on top

of the stack, at which time the execution of foo is suspended and the

execution of bar starts, and so on… This process of stack creation continues

until the function on the top of the stack completes and returns.

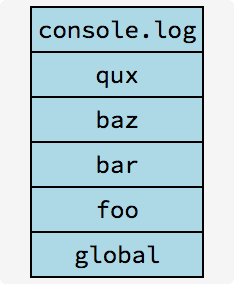

In this example, the last function that is called is console.log. When it is

called, the context stack appears as thus:

After console.log has finished and returns, its context is popped (removed)

from the top of the stack, and execution of the next topmost context is resumed.

In this example, qux has no further operations after console.log, so it too

will then return execution to baz, and so on, until execution reaches the

bottom of the stack at the global context.

In short, execution can be said to run “up” a stack when functions are called, and then back “down” the stack when those functions return.
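The “up” and “down” movement can be made visible by recording a line whenever a function is entered and again just before it returns. The following is a minimal sketch that reuses function names from the example above; the `trace` array and the recording calls are additions made purely for illustration.

```javascript
// Records the order in which execution contexts are entered and left.
const trace = [];

function foo() {
  trace.push('enter foo');
  bar();
  trace.push('leave foo');
}

function bar() {
  trace.push('enter bar');
  baz();
  trace.push('leave bar');
}

function baz() {
  trace.push('enter baz');
  trace.push('leave baz');
}

foo();
console.log(trace.join('\n'));
```

The entries come out nested: the last function entered is the first one left, which is exactly the behavior of a stack.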

Of course, any single function may itself call many other functions. Because execution of the current function is paused when entering a new function, this means that some contexts may live on the stack longer than the entire lifetime of multiple other contexts. Consider a second example:

function foo() {

bar();

baz();

}

function bar() {

/* ... */

}

function baz() {

/* ... */

}

// call foo

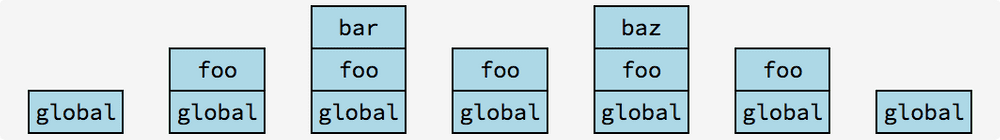

foo();The stack in this example, over time, would appear as:

Notice that the context of foo persists while the contexts above it pop in and

out of existence.

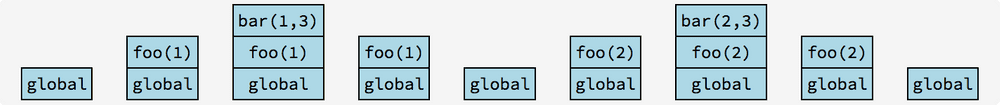

It is important to note that, because an execution context includes information

about how a function is invoked, a new execution context is created every

time a function is invoked. Consider the following example, where calling

foo with different arguments makes it easier to see the different contexts

created for each call.

function foo(a) {

return bar(a, 3);

}

function bar(a, b) {

return a + b;

}

foo(1); // 4

foo(2); // 5

In all of these examples thus far, we have demonstrated synchronous execution. It is important to note that functions in JavaScript exhibit a behavior known as run-to-completion: a synchronous function, once placed on the stack, always executes in its entirety until it either returns or throws a value.

Concurrency vs. Parallelism

We are about to explore ways in which we can break up our programs to run asynchronously – that is, to make different pieces of code run at different times, even if the code happens to be close together lexically.

More specifically, JavaScript offers a concurrent mechanism of execution, meaning that multiple higher-level objectives can be completed (or partially worked on) over a given time period. We will soon see ways to delay part of our code execution until an arbitrarily later time while allowing other parts of code to run in the meantime.

This does not mean that different pieces of code can run in parallel (at the same time). Remember, JavaScript in the browser runs on a single thread, which means that only one function can be executing at any single instant in time. Fortunately, this means that JavaScript developers are protected against a large class of race conditions and other pitfalls present in other languages which allow lower-level control over threading; for example, no JavaScript code that you write will encounter an error where multiple threads of execution try to read from or write to the same variable in memory simultaneously.
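As a small sketch of concurrency without parallelism, the two jobs below split their work into chunks and give other tasks a chance to run between chunks, yet each chunk runs without interruption. It relies on `setTimeout`, which is introduced in the next section; the names `runJob`, `counter`, and `order` are hypothetical.

```javascript
let counter = 0;   // shared state, visible to both jobs
const order = [];  // records which job ran each chunk

// Runs `chunks` pieces of work, yielding to other tasks between pieces.
function runJob(name, chunks) {
  if (chunks === 0) return;
  // Read-modify-write on shared state: safe, because no other code
  // can run in the middle of this function.
  const current = counter;
  counter = current + 1;
  order.push(name);
  setTimeout(runJob, 0, name, chunks - 1);
}

runJob('A', 3);
runJob('B', 3);

// Report once both jobs have had ample time to finish.
setTimeout(() => {
  console.log(`chunks run: ${order.join(' ')}`);
  console.log(`counter: ${counter}`);
}, 100);
```

Both jobs make progress over the same period of time, but the counter always ends at 6 because no update is ever lost to a simultaneous write.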

The Event Loop

JavaScript exhibits concurrency with a model called the event loop. Let’s define a few concepts which we need in order to discuss the event loop:

- Agent – the host environment in which a JavaScript program is running (e.g., a browser or Node.js process)

- Stack – the stack of execution context frames

- Heap – the large, unstructured blob of memory where JavaScript stores all of the variables currently accessible inside of any closure

- Queue – a queue of tasks waiting to be processed

An agent maintains a task queue, where each task (also called a “message” or “event”) has a single execution context frame as an entry point. The agent takes the first task in the queue, pushes its context onto the stack, and then the JavaScript engine synchronously runs that stack to completion as we described in the previous section. When that task completes (its stack becomes empty), the next task is taken off of the queue and run. The engine continues processing tasks in this manner indefinitely as long as the agent is running. This mechanism is called the event loop because the engine “loops over” all of the incoming events on the queue.

All execution contexts in any stack have access to the same heap of memory. That is, functions called across different tasks retain access to the state of their closure. A given variable in memory on the heap is only garbage-collected by the engine when it detects that the variable can no longer be accessed from any closure.
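The loop described above can be modeled in a few lines. This is a toy sketch only, not how any real agent is implemented: the names `taskQueue`, `enqueue`, and `runEventLoop` are hypothetical, and a real event loop waits for new tasks instead of stopping when the queue is empty.

```javascript
const taskQueue = [];  // tasks waiting to be processed, oldest first
const log = [];        // shared memory: every task can reach it through its closure

function enqueue(task) {
  taskQueue.push(task);
}

function runEventLoop() {
  while (taskQueue.length > 0) {
    const task = taskQueue.shift(); // take the first task in the queue
    task();                         // run its stack to completion
  }
}

enqueue(() => {
  log.push('task 1 start');
  // A task may enqueue further tasks; they run only after it finishes.
  enqueue(() => log.push('task 3'));
  log.push('task 1 end');
});
enqueue(() => log.push('task 2'));

runEventLoop();
console.log(log);
```

Note that the task enqueued from inside the first task runs last, because it joins the back of the queue behind the task that was already waiting.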

The tasks in the queue correspond to the asynchronous events we mentioned earlier – things like network requests or user interaction events.

Standard agent environments provide a few built-in functions which we can use to “wrap” function invocations in a new task to be put onto the queue. One of these is the setTimeout function which, as its name implies, sets up something to happen after a given amount of time. Its function signature is:

setTimeout(func, delay, ...args)

- `func`

- function to be invoked after a time _delay_

- `delay` _optional_

- delay in milliseconds [default `0`]

- `...args` _optional_

- values to provide as arguments to `func` when it is called
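To illustrate the optional trailing arguments, here is a short sketch in which the hypothetical function `greet` receives its arguments from `setTimeout` itself. The `received` array exists only so the result can be inspected afterwards.

```javascript
const received = [];

function greet(greeting, name) {
  received.push(`${greeting}, ${name}!`);
  console.log(`${greeting}, ${name}!`);
}

// After roughly 100 ms, the agent calls greet('Hello', 'world').
setTimeout(greet, 100, 'Hello', 'world');

// An equivalent form wraps the call in an arrow function instead.
setTimeout(() => greet('Goodbye', 'world'), 200);
```

Both forms produce the same kind of task; which one to use is mostly a matter of taste.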

function foo() {

console.log('timeout');

}

setTimeout(foo, 500);

console.log('outer');

> "outer"

> [ 0.5 sec later ]

> "timeout"

Notice that the code does not block or halt when we set the timeout. This code synchronously gives the agent a reference to the function foo, telling it to wait 500 milliseconds before enqueueing the function as a task. After the timer is set, the current execution context keeps going, encountering the log statement on the last line. After about half a second, the agent puts the new task into the queue and – assuming that there are no other tasks in front of it – the engine immediately takes it from the queue and invokes it in a new stack.

The function that we add to the queue is often called a callback function, because we are sending it away to be “called back” at a later time.

We can more clearly demonstrate synchronous run-to-completion behavior by setting a timeout with zero delay (which is also the default, if no delay is provided). Without our knowledge of the event loop, we might expect a timeout callback with no delay to execute immediately, before any later statements.

function foo() {

console.log('timeout');

}

setTimeout(foo, 0);

console.log('outer');

> "outer"

> "timeout"

As we can see, though, the function placed on the queue is still invoked after the rest of the current execution stack has finished.

With this knowledge of the event loop, we must beware of expensive, long-running synchronous tasks. The delay parameter in timeouts is not a guaranteed execution time; rather, it is the minimum time after which the task will be placed onto the queue. If we set a timeout with a delay, we know that the targeted function will not execute before that amount of time has passed. However, there may still be code in the current execution stack that will take more time than the delay to complete, and it is also possible that entirely new tasks might be put onto the queue in the meantime. The next example exhibits some of these possibilities; can you predict the order in which the messages will be printed to the console?

setTimeout(() => {

console.log('timeout 1000');

}, 1000);

console.log('timeout 1000 scheduled');

// Assume that `expensiveFunction` executes some very CPU-intensive

// code that takes 5 seconds to synchronously finish.

expensiveFunction();

setTimeout(() => {

console.log('timeout 500');

}, 500);

console.log('timeout 500 scheduled');

console.log('initial stack done');

```text
> "timeout 1000 scheduled"
> [ 5 sec later ]
> "timeout 500 scheduled"
> "initial stack done"
> "timeout 1000"
> [ 0.5 sec later ]
> "timeout 500"
```

There are a few more functions related to setTimeout(), such as clearTimeout(), setInterval(), and clearInterval(). You can probably hypothesize as to their functionality… look them up when you get a chance.
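As a quick sketch of those related timer functions, the example below cancels one timeout before it fires and stops an interval after three ticks. The variable names are hypothetical, and the delays are kept short only so that the example finishes quickly.

```javascript
// setTimeout returns an identifier that clearTimeout can use to cancel it.
let cancelledRan = false;
const timeoutId = setTimeout(() => {
  cancelledRan = true;
}, 50);
clearTimeout(timeoutId); // the callback above never runs

// setInterval enqueues its callback repeatedly until it is cleared.
let ticks = 0;
const intervalId = setInterval(() => {
  ticks += 1;
  console.log(`tick ${ticks}`);
  if (ticks === 3) {
    clearInterval(intervalId); // stop after the third tick
  }
}, 20);
```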

Callback Function Pattern

Using callbacks is an extremely common pattern in the highly-asynchronous world

of JavaScript. As previously mentioned in our discussion of setTimeout(), this

pattern gets its name from the fact that we provide a function as an argument

which we want to be “called back” some time in the future after some criteria

are met (a timer finishes, a network request is fulfilled, a user action occurs,

etc.).

Callback functions are often written as inline function expressions passed as an argument to an asynchronous call, such as:

setTimeout(function() {

// Stuff to do in 500ms

}, 500);

However, any reference to a function will do:

function callback() {

/* ... */

}

let callback2 = () => {

/* ... */

};

setTimeout(callback);

setTimeout(callback2);

To facilitate our discussion in this section, let’s introduce a simple asynchronous use case. Imagine that we want to get a random number from a faraway server. This would require a network request, and because we have no guarantees on how long it would take to complete, we should not stop synchronous execution on the main thread.

We’ll make a small utility function randAsync to simulate this request. Though

our function will actually generate a random number on the same machine, it will

make no difference from the point of view of the user of our function; they

will be able to use the callback pattern in an abstract manner regardless of how

randAsync is implemented internally.

/**

* randAsync - asynchronously generates a random number

* @param callback {function}

*/

function randAsync(callback) {

// When this function is called, `callback` will be a reference

// to a function that we can then invoke whenever we want.

setTimeout(() => {

let randNum = Math.random();

callback(randNum);

}, 1000);

}

randAsync(num => console.log(num));

> [ 1 sec later ]

> 0.12345...

In non-trivial applications, we usually have complex execution flows comprised of multiple asynchronous steps, where each one depends on a calculated result from the previous step. In this case, we need to make asynchronous calls in series, where one step does not fire until the previous step has finished. In the callback pattern, we can achieve this by nesting callbacks inside of each other.

randAsync(function(num1) {

// When this function is executing, it means we already have num1,

// so now we can make another async call to get num2

randAsync(function(num2) {

// ...

randAsync(function(num3) {

randAsync(function(num4) {

randAsync(function(num5) {

console.log(num1 + num2 + num3 + num4 + num5);

});

});

});

});

});

> [ 5 sec later ]

> 2.34567...

And thus, with our highly-nested callbacks, we begin to construct what is colloquially referred to as a “pyramid of doom” (or “callback hell”).

Error Handling in Async Callbacks

In the scenario above, we created a randAsync function that simulated getting a random number generated somewhere far away. In reality, not only do we have no guarantee that a network request will be fast; we also have no guarantee that it will complete successfully at all.

Asynchronous operations almost always deal with tasks that might eventually fail or encounter other errors. In this case, callback functions – and the functions which accept callbacks – need to be able to handle both successful and failure scenarios.

How might we go about extending our randAsync function to simulate a network

error with some probability? Well, we’re already generating a random number

between 0 and 1, so let’s say that any number less than 0.5 indicates an error.

That’s just one if statement – not too difficult to implement.

Once we produce an error, how will the user of our function receive it? At first, we might reach for the throw and try..catch mechanisms that we saw earlier:

function randAsyncThrow(callback) {

setTimeout(function innerTimeout() {

let randNum = Math.random();

if (randNum < 0.5) {

throw new Error('Number is too small!');

} else {

callback(randNum);

}

}, 1000);

}

This will certainly throw an error roughly half of the time… but then, how will a user of the function catch the thrown error? try..catch?

try {

randAsyncThrow(num => console.log(num));

} catch (err) {

console.error('Caught an error:', err);

}

This appears as though it may work. If you run this code a few times, you will indeed see errors with the message "Number is too small!". Look closely at the errors, however, and you will notice that they say "Uncaught Error", and nothing about the "Caught an error" that we wrote in our catch block. Did these errors really go uncaught?

As a matter of fact, they did. To understand why, we’ll have to combine our

knowledge of throw with our insight into the event loop. Recall that when a

value is thrown, it alters the synchronous flow of execution in the current

call stack. A thrown value will fall back down the call stack until it reaches

an enclosing try block with an associated catch.

Ah, but in asynchronous callbacks, code no longer runs in the same call stack! So, in our randAsyncThrow function, in the instant before the new error was thrown, the currently-executing call stack contained only one function’s execution context: innerTimeout. The contexts of the function randAsyncThrow and the script that called it had already completed and been popped off of their own stack long before. When the error was thrown in innerTimeout, it had nowhere further down the call stack to be caught. The JavaScript engine noticed this and passed the error to our console, automatically labeling it as uncaught.
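One consequence is that a try..catch only helps when it sits in the same call stack as the throw. The sketch below moves the try block inside the timeout callback, where it does catch the error; `riskyStep` and `caught` are hypothetical names used only for this illustration.

```javascript
const caught = [];

function riskyStep() {
  throw new Error('Number is too small!');
}

setTimeout(function innerTimeout() {
  try {
    // riskyStep runs on top of innerTimeout in the same stack,
    // so the thrown error falls back down into this try block.
    riskyStep();
  } catch (err) {
    caught.push(err.message);
    console.error('Caught an error:', err.message);
  }
}, 100);
```

This handles the error at the place where it occurs, but it still gives the caller of an asynchronous function no way to react to the failure.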

This brings us back to our original question: how can the user of our function handle an error case if we cannot use synchronous try..catch across tasks? It turns out that, just as a user must provide logic to handle an eventual value (in the form of a callback), they must also provide logic to handle an eventual error. There are many ways we could facilitate this, such as requiring the user to provide two different callbacks (one to invoke with a successful value, and one to invoke with an error), or simply using the same callback for both success and error conditions and leaving the user the responsibility to detect which occurred.
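As a sketch of the first option, the hypothetical function `randAsyncTwoCallbacks` below accepts separate success and failure callbacks. The optional `rng` and `delay` parameters are assumptions added here so that the outcome can be controlled when experimenting; they are not part of the utilities used elsewhere in this chapter.

```javascript
function randAsyncTwoCallbacks(onSuccess, onError, rng = Math.random, delay = 1000) {
  setTimeout(() => {
    const randNum = rng();
    if (randNum < 0.5) {
      onError(new Error('Number is too small!'));
    } else {
      onSuccess(randNum);
    }
  }, delay);
}

const results = [];
const onSuccess = num => results.push(`Got a number: ${num}`);
const onError = err => results.push(`Got an error: ${err.message}`);

// Force each outcome by supplying a fixed "random" source and a short delay.
randAsyncTwoCallbacks(onSuccess, onError, () => 0.75, 50);
randAsyncTwoCallbacks(onSuccess, onError, () => 0.25, 100);

setTimeout(() => console.log(results), 200);
```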

Most libraries and other utilities that use callbacks follow an error-first

callback pattern (sometimes called a “Node-style” callback) where the user

provides a single callback that accepts multiple arguments. In the spirit of

making sure that errors are not overlooked, the callback’s first parameter

represents an error condition. If no error occurs, the callback is invoked with

a first argument of null, and the successful value is provided as the second

argument.

Let’s update our randAsync utility to work with this style of callback.

function randAsyncNstyle(callback) {

setTimeout(() => {

let randNum = Math.random();

if (randNum < 0.5) {

callback(new Error('Number is too small!'));

} else {

callback(null, randNum);

}

}, 1000);

}

Now, users of this function must provide callbacks that are themselves able to handle both success and error conditions.

let userCallback = function(err, num) {

if (err) {

console.error('Got an error:', err.message);

} else {

console.log('Got a number:', num);

}

};

randAsyncNstyle(userCallback); // let's say this call generated 0.12345

randAsyncNstyle(userCallback); // ... and this one generated 0.56789

> [ 1 sec later ]

> "Got an error: Number is too small!"

> "Got a number: 0.56789"

This new function works great, and users are able to provide callbacks that can handle errors. But notice that it only took ~1 second for both of the above calls to work. This is because both calls happened synchronously and independently from the same execution context; one call did not wait for the other to complete. Because of this, we might say that these calls were fired “in parallel”, but the terminology here serves only to distinguish this pattern from callbacks that happen serially, one after the other.

Where there are serial dependencies, we must again move to nested callbacks.

randAsyncNstyle(function(error, num1) {

if (error) {

console.log('Error with num1: ' + error.message);

} else {

randAsyncNstyle(function(error, num2) {

if (error) {

console.log('Error with num2: ' + error.message);

} else {

randAsyncNstyle(function(error, num3) {

if (error) {

console.log('Error with num3: ' + error.message);

} else {

let sum = num1 + num2 + num3;

console.log('Success: sum = ' + sum);

}

});

}

});

}

});

When every callback must handle success and error states, then, at every point of the asynchronous flow, your code logic bifurcates. In the above example, the flow simply stops if there is an error, though in some scenarios we may want to fire off even more asynchronous operations to handle the errors.

Now, instead of a linear flow of steps, we potentially have a decision tree whose number of possible branches grows exponentially with the number of steps involved.

It takes careful consideration to keep callback code organized and readable. But still, overall, the callback pattern is often good enough for some use cases, even in light of newer asynchronous mechanisms that the latest versions of JavaScript provide.
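One way to keep such code flat, sketched here under the assumption that every step uses the same error-first function, is to replace the hand-written nesting with a small recursive helper. `sumInSeries` is a hypothetical name, and `randAsyncNstyle` is repeated with optional `rng` and `delay` parameters (not in the original version) so that the block is self-contained and its outcome can be controlled.

```javascript
function randAsyncNstyle(callback, rng = Math.random, delay = 1000) {
  setTimeout(() => {
    const randNum = rng();
    if (randNum < 0.5) {
      callback(new Error('Number is too small!'));
    } else {
      callback(null, randNum);
    }
  }, delay);
}

// Requests `count` numbers one after another and reports their sum.
// Stops at the first error and passes it to `done`.
function sumInSeries(count, done, rng, delay, sum = 0) {
  if (count === 0) {
    done(null, sum);
    return;
  }
  randAsyncNstyle((error, num) => {
    if (error) {
      done(error);
    } else {
      sumInSeries(count - 1, done, rng, delay, sum + num);
    }
  }, rng, delay);
}

const outcomes = [];
// A fixed "random" source of 0.5 makes every step succeed.
sumInSeries(3, (error, sum) => {
  outcomes.push(error ? `Error: ${error.message}` : `Success: sum = ${sum}`);
  console.log(outcomes[outcomes.length - 1]);
}, () => 0.5, 20);
```

The error check is written once instead of once per nesting level, at the cost of a helper that readers must understand first.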

Synchronous Callbacks

While we just explored the use of callbacks in asynchronous use, it is entirely

possible – and not uncommon – to use a callback pattern synchronously as

well. Consider an example where we re-write a version of our previous

randAsync function to operate synchronously, without setting any timeout:

function randSync(callback) {

let num = Math.random();

callback(num);

}

console.log('outer before');

randSync(function inner1(num1) {

// named function expressions (the names show up in stack traces)

randSync(function inner2(num2) {

console.log('num1 + num2 = ' + (num1 + num2));

});

});

console.log('outer after');

> "outer before"

> "num1 + num2 = 0.56789..."

> "outer after"

In this case, the entire execution happened synchronously on the same single task in the event loop. If we were to look at the stack at the instant that the sum of the generated numbers was logged to the console, it would appear as:

[ console.log ]

[ inner2 ]

[ randSync ]

[ inner1 ]

[ randSync ]

[ global ]

Promises

Standardized in ES2015, promises offer a different take on managing asynchronous program flow. The semantics that promises provide allow us to write code that more closely matches how our synchronous brains think about the flow of data through our programs. Most newer and upcoming libraries and HTML APIs utilize promises, so it is well worth getting to know them.

Essentially, a promise is an object which “represents a future value” and provides methods for assigning operations to execute using that value when, and if, it is eventually available.

When a promise is created, it is in a pending state, which means that it does not yet have an internal value. At some point in the future, it may be resolved with a successful value or rejected with an error value. Once a promise is either resolved or rejected, it is considered to be settled and its internal value cannot change.

Most of the time you will work with promises that are already created, whether returned by calls to third-party libraries or from built-in browser or other environment APIs themselves. Even so, we can always build our own promises if needed.

The global Promise constructor accepts a synchronous callback function which

is immediately invoked and injected with two parameters: the functions resolve

and reject. The constructor operates in this way in order to create a closure

over the resolve and reject functions which are unique to this single

promise instance. Any code inside of the constructor callback then has lexical

access to these functions, which can be called at any point in the future, even

from a different task on the event queue. The resolve function can be invoked

with a single argument to resolve its associated promise; likewise, calling the

reject function will cause the promise to be rejected. Notably, once either of

these functions has been invoked, the promise becomes settled and any future

invocations of resolve or reject will have no effect.
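The “settled only once” rule can be checked with a short sketch. It uses the `.then()` method, described just below, only to observe the outcome; the names `settledOnce` and `seen` are hypothetical.

```javascript
const settledOnce = new Promise((resolve, reject) => {
  resolve('first');               // settles the promise
  resolve('second');              // ignored: already settled
  reject(new Error('too late'));  // ignored as well
});

const seen = [];
settledOnce.then(
  value => {
    seen.push(`resolved: ${value}`);
    console.log(`resolved: ${value}`);
  },
  err => {
    seen.push(`rejected: ${err.message}`);
    console.log(`rejected: ${err.message}`);
  }
);
```

Only the first call has any effect, so the promise resolves with 'first' and the rejection handler never runs.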

// Creates a promise that will resolve with the value 42 after ~500ms

let p = new Promise((resolve, reject) => {

setTimeout(() => resolve(42), 500);

// or

// setTimeout(resolve, 500, 42);

});

Promises are sometimes called “thenable” because we operate on them using their .then() function. The Promise.prototype.then function accepts a then handler as its first argument, which is a function to be invoked with the resolved value once it is available. It also accepts an optional catch handler as a second argument, which will be invoked with a rejected value if the promise is rejected.

let p = new Promise(resolve => {

console.log('Constructing first promise');

setTimeout(resolve, 500, 42);

});

let thenHandler = function(value) {

console.log(`Resolved value: ${value}`);

};

p.then(thenHandler); // register the handler

let p2 = new Promise((_, reject) => {

console.log('Constructing second promise');

setTimeout(reject, 500, new Error('abc'));

});

let catchHandler = function(err) {

console.log(`Rejected with an error: ${err.message}`);

};

p2.then(thenHandler, catchHandler); // register the handlers

> "Constructing first promise"

> "Constructing second promise"

> [ 500ms later ]

> "Resolved value: 42"

> "Rejected with an error: abc"

Notice that the promise constructors are run synchronously and the handlers are run asynchronously. In the example above the initial promises are not resolved until about half a second after they are created. However, even if the promises were resolved immediately, any attached handlers would still run asynchronously after the current synchronous task completes as normal.

We can also use the static methods Promise.resolve() and Promise.reject() to

wrap an immediate value in a new resolved or rejected promise, respectively.

let resolvedP = Promise.resolve(42);

let rejectedP = Promise.reject(new Error('abc'));

resolvedP instanceof Promise; // true

rejectedP instanceof Promise; // true

resolvedP.then(val => console.log(`Resolved value ${val}`));

rejectedP.catch(err => console.log(`Rejected error ${err.message}`));

console.log('Handler functions attached');

> "Handler functions attached"

> "Resolved value 42"

> "Rejected error abc"

In this case, the handler functions were registered on the promises after the promises had already been settled. This behavior is true of all promises – a handler can be attached at any time, even after the promise has been settled.

Similarly, a promise’s .catch() method can also be used to register a catch

handler.

let p = new Promise((_, reject) => setTimeout(reject, 500, new Error('abc')));

p.catch(err => console.log(`Rejected with an error: ${err.message}`));

Let us once again revisit our asynchronous random-number-generating use case and write a function which returns a promise for a random number instead of accepting a callback.

/**

* Asynchronously generate a random number

* @return {Promise<number>} Promise for a random number 0.5 <= x < 1

*/

function randAsyncP() {

return new Promise((resolve, reject) => {

setTimeout(() => {

let randNum = Math.random();

if (randNum < 0.5) reject(new Error('Number is too small!'));

else resolve(randNum);

}, 1000);

});

}

The .then() and .catch() functions themselves return new promises which represent the eventual completion of the handler functions provided. The new promises will themselves be resolved with whatever value is returned from the execution of the handler functions.

let p = Promise.resolve(42);

let p2 = p.then(val => val * 2);

p2.then(val => console.log(val));

Promises can also be chained:

let p = new Promise((resolve, reject) => {

setTimeout(resolve, 500, 42);

});

p.then(value => {

console.log(`Resolved with value ${value}`);

return value * 2;

})

.then(value => console.log(value))

.catch(error => console.log(`Rejected due to ${error}`));

console.log('Promise chain constructed');

> "Promise chain constructed"

> [ 500ms pass ]

> "Resolved with value 42"

> 84

Observe the pattern of the chain of .then()s. Each one returns a new Promise which is also “thenable”, so we can create a linear sequence of then handlers to run async operations one after another. The values returned by each handler are used to resolve these intermediate promises, which then moves control down the chain to the next then handler. At any point, a .catch() can be used to catch promise rejections or thrown errors.

let p = new Promise((resolve, reject) => {

setTimeout(resolve, 500, 42);

});

p.then(value => {

console.log(`Resolved with value ${value}`);

if(value < 50) throw new Error('Less than 50');

return value * 2;

})

.then(value => console.log(value))

.catch(error => console.log(`Rejected due to ${error}`));

console.log('Promise chain constructed');

> "Promise chain constructed"

> [ 500ms pass ]

> "Resolved with value 42"

> "Rejected due to Error: Less than 50"

We can save references to any intermediate point in the promise chain and use it to start a new branch in the control flow.

let p = new Promise((resolve, reject) => {

setTimeout(resolve, 500, 42);

});

let p2 = p.then(value => {

console.log(`Resolved with value ${value}`);

return value * 2;

});

p2.then(value => console.log(value));

p2.then(value => console.log(`Also ${value}`));

console.log('Promise chain constructed');

> "Promise chain constructed"

> [ 500ms pass ]

> "Resolved with value 42"

> 84

> "Also 84"

Notice the subtle, but important, difference:

p.then(/*...*/).then(/*...*/);

creates a linear sequence; p will resolve, then the first handler will run given the resolved value of p, then the second handler will run given the resolved value returned from the first handler.

On the other hand,

p.then(/*...*/);

p.then(/*...*/);

expresses two branches in the control flow; both of the then handlers will execute with the resolved value of p.
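A linear chain is also how serial asynchronous steps, like the nested callbacks earlier in this chapter, can be written with promises: when a then handler returns a promise, the next handler in the chain waits for it and receives its resolved value. The sketch below uses a hypothetical helper, `delayedValue`, in place of a real network request.

```javascript
// Returns a promise that resolves with `value` after `ms` milliseconds.
function delayedValue(value, ms) {
  return new Promise(resolve => setTimeout(resolve, ms, value));
}

const steps = [];

const total = delayedValue(1, 30)
  .then(num1 => {
    steps.push(`got ${num1}`);
    // Returning a promise makes the chain wait for the second step.
    return delayedValue(2, 30).then(num2 => num1 + num2);
  })
  .then(sum => {
    steps.push(`sum ${sum}`);
    return sum;
  });

total.then(sum => console.log(`Sum: ${sum}`)); // logs "Sum: 3"
```

The second request does not start until the first has finished, which is the same serial behavior as the nested callbacks but with far less nesting.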

You can attach handlers to a promise even after it has already been fulfilled, in which case the handler will be immediately appended to the job queue at the end of the current message in the event loop.

The global Promise object has the built-in methods Promise.all() and Promise.race(), which can be used, respectively, to wait for every promise in a collection to be fulfilled, or to “race” a collection of promises and take the result of the first one to settle, whether it is fulfilled or rejected.
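Here is a brief sketch of both methods. The helper `delayedValue` is hypothetical and stands in for any asynchronous operation; the delays are arbitrary.

```javascript
// Returns a promise that resolves with `value` after `ms` milliseconds.
function delayedValue(value, ms) {
  return new Promise(resolve => setTimeout(resolve, ms, value));
}

// Promise.all resolves with an array of values, in the order of the inputs,
// once every input promise has resolved.
const all = Promise.all([
  delayedValue('slow', 60),
  delayedValue('fast', 20),
]);

// Promise.race settles as soon as the first input promise settles.
const race = Promise.race([
  delayedValue('slow', 60),
  delayedValue('fast', 20),
]);

all.then(values => console.log(values));   // [ 'slow', 'fast' ]
race.then(winner => console.log(winner));  // fast
```

Note that Promise.all preserves the order of its inputs rather than the order of completion, and that it rejects as soon as any one of its inputs rejects.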

With these capabilities of promises, you can create asynchronous flows of data and control which are short and linear, or large, complex, and branching – it all depends on what your application needs. Promises are a mechanism we can use to express these flows in code in a manner that allows us to more easily communicate and reason about them.

I highly recommend reading the article Exploring ES6 Promises In-Depth by Nicolás Bevacqua, listed in the Resources section of the Appendix.